Why document preprocessing plays an important role in boosting OCR performance

Why do we need binarization?

We at Gini are processing thousands of document images every hour. The documents come in a vast variety of formats and styles. Some documents are photographed with flash, some are affected by shadows. Some are crisp and clear, and some are blurred and out of focus. Some use colorful inks, and some are printed on non-white paper. All these factors negatively affect the OCR (Optical Character Recognition) performance and information extraction. In fact, depending on the lighting conditions, the ink on some documents can appear lighter than the (white) paper on others. Just remember the famous checkerboard shadow illusion. In the image below, believe it or not, squares A and B are of the same color.

Figure 1 Checkerboard shadow illusion (source).

Is global thresholding good enough?

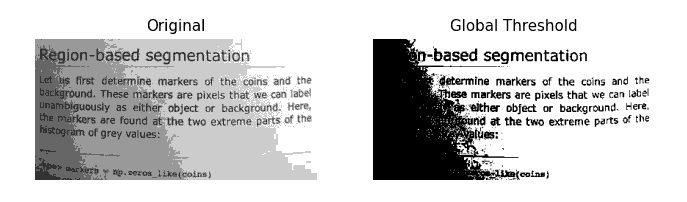

Therefore, in order to minimize the effect of the visual artifacts mentioned above, the documents are typically binarized before running the OCR. This helps the OCR to focus on the important information since the binarization filters out everything that is not related to the information extraction. One of the straightforward binarization methods is the global thresholding where all pixels darker than a certain threshold color are considered to be ink. However, given the large variety of document styles and capturing conditions, using the simple global thresholding approach is likely to produce unusable results. As illustrated in Figure 2, one single binarization threshold can be too aggressive for some parts of an image and too weak for others.

Figure 2 Document binarization using one single threshold (source).

Adaptive binarization

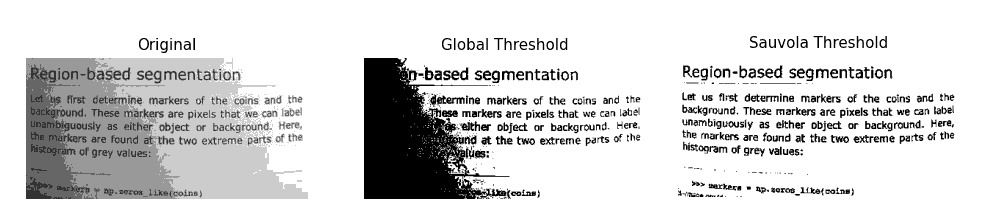

Fortunately, some approaches are able to account for the local visual characteristics of document images and adjust the threshold accordingly. One of the classic thresholding approaches in image processing is the so-called Sauvola method. It computes the threshold for each pixel individually, taking into account the average illumination and contrast in a (close) vicinity of the pixel in question. This leads to much more precise and robust binarization results, as illustrated in Figure 3.

Figure 3 Document binarization using different thresholding methods (source).

However, even though the Sauvola algorithm is widely used in document processing, it does have considerable limitations. In fact, before applying the algorithm, we should ask ourselves the following questions:

- How large should the pixel vicinity be? A certain size may work for document titles but may be overkill for footnotes written with much smaller fonts.

- How does the local contrast affect the threshold? In other words, how much darker (in relative terms with respect to the background) should a pixel be in order to consider it as ink and not a shadow, noise, or other photo artifacts?

In the standard Sauvola method, these issues are controlled by a set of parameters. However, different document types and lighting conditions would require different parameter settings in order to obtain the optimal result. And what about blurry pixels, missing ink, and camera noise?

Deep learning-based binarization and automatic data generation

Our objective is to leverage the flexibility of the Sauvola method in an even more adaptive way. In order to do so, we focus on adaptively tuning the aforementioned hyper-parameters for every pixel. The approach is based on the SauvolaNet architecture, a CNN-based solution that estimates the hyper-parameters locally. We have gone several steps further and enhanced the SauvolaNet architecture by adding an adaptive color space converter, a post-processing module with residual bypass, and a restoration subnet to account for defects and/or missing pixels (e.g. missing ink).

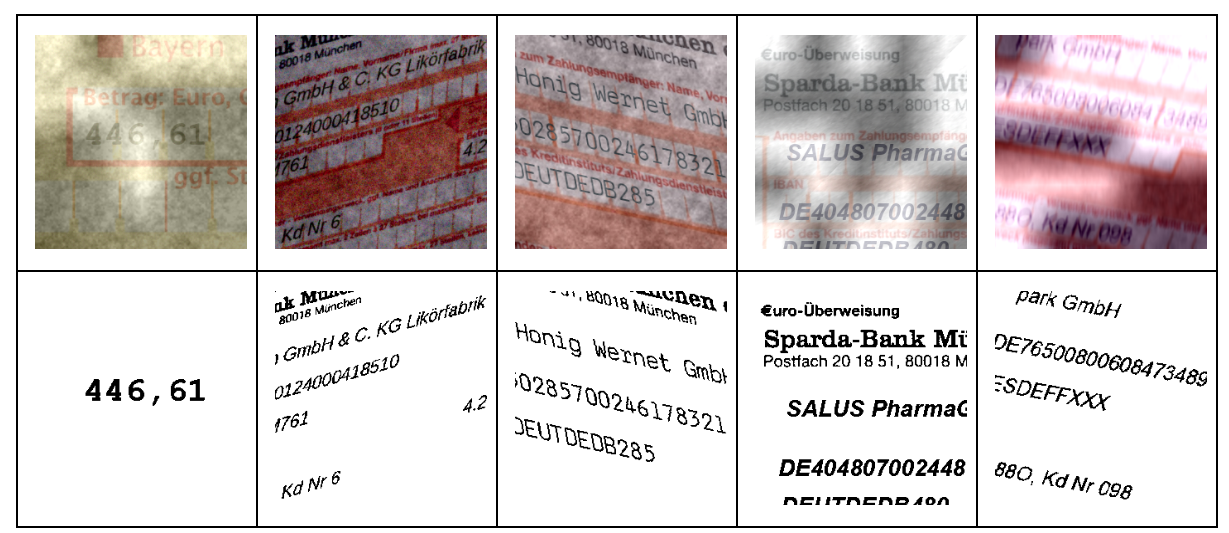

For our binarization, like for any supervised machine learning approach, the data is the key factor. Manual labeling of binarization maps is a tedious job and poses a prohibitive effort to build a dataset with a meaningful size. Therefore, we had to adopt an alternative approach. For that purpose, we built an automatic generator of documents and the corresponding binarization maps (ground truth). The generator fills the documents with fake data and applies various visual augmentation techniques to achieve realistic looks. Snippets of these artificially generated documents and the corresponding binarization maps are shown in Figure 4.

Figure 4 Artificially generated documents and the corresponding binarization maps.

Our results

And what do our results look like? A comparison of our approach and the Sauvola thresholding is illustrated in Figure 5. Our binarization approach is more robust and accurate and, in turn, boosts the information extraction performance by up to 9% with respect to the classic Sauvola thresholding approach.

| Original image | Sauvola thresholding | Our approach |

Figure 5 Comparison of the classic Sauvola thresholding and our approach. |

||

We have shown that proper document preprocessing plays a key role in information extraction. If we manage to get rid of all irrelevant information from the picture, we can significantly boost the OCR performance. This is where our binarization solution comes into play and prepares the image for subsequent processing within our photo payment solution. Taking a good and clean picture of a document takes some time and effort. Thanks to our binarization solution, the users of our photo payment can spend this time on something else :-)

. . .

If you enjoy mastering challenges of machine learning like this one, you should probably check out our open positions. We are always looking for excellent developers to join us!

At Gini, we want our posts, articles, guides, white papers and press releases to reach everyone. Therefore, we emphasize that both female, male, and other gender identities are explicitly addressed in them. All references to persons refer to all genders, even when the generic masculine is used in content.